Resilient, Scalable, and Secure

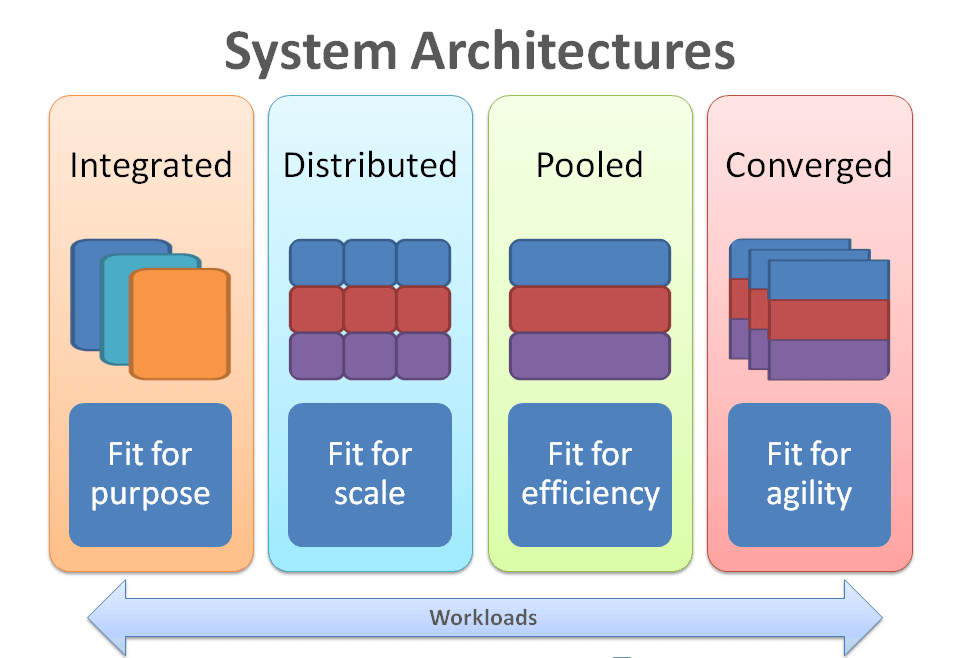

This plan outlines the steps for building a distributed architecture for your system. It covers Docker Swarm, MongoDB and PostgreSQL replication, local and cloud nodes, database synchronization, security, and monitoring.

The goal is to ensure high availability, data consistency, and resilience across geographic locations such as Douala (Cameroon), Yaoundé (Cameroon), and your cloud servers.

1. Architectural Design

a. Define Key Components

- Local Nodes: You will deploy nodes in Douala and Yaoundé, which will handle local read/write operations.

- Cloud Swarm: The cloud servers will serve as the primary database cluster and provide redundancy and failover options.

- Services: You need to identify which services (Django APIs, databases, front-end apps) will be distributed across these nodes.

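- Database Replication: Plan for replica-set replication for MongoDB (one writable primary per replica set with automatic failover; MongoDB has no built-in multi-master mode) and asynchronous replication for PostgreSQL.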

b. Define the Communication Protocol

- Use IPsec (strongSwan) over MPLS for secure communication between local nodes and the cloud.

- Ensure that all database synchronization traffic, API requests, and management interfaces are routed securely through this encrypted tunnel.

c. Swarm Structure

- Local Docker Swarms for Douala and Yaoundé.

- Cloud Docker Swarm to manage and synchronize services globally.

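- Overlay Network to allow services to communicate across nodes using Docker Swarm’s multi-host networking feature. An overlay network spans the nodes of a single swarm, so traffic between separate swarms has to be routed through the IPsec tunnel.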

2. Database Architecture

a. MongoDB Setup

- Implement MongoDB replica sets for high availability and read scaling.

- Use sharding if you expect large-scale datasets that need to be distributed across multiple nodes.

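- Each local node (Douala, Yaoundé) will host secondary members that serve local reads; writes always go to the current primary. A local member can be elected primary in case of a cloud failure, but only if it can still reach a majority of the replica set’s voting members.

- When the network between the cloud and local nodes is restored, MongoDB’s replication will bring the members that fell behind back in sync with the current primary. Writes accepted by a former primary that never reached a majority of members are rolled back rather than merged, so use a majority write concern for data that must survive a failover.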
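Whether a local member can take over as primary depends on how many voting members it can still reach, because a MongoDB replica set needs a majority of its voting members to elect a primary. The sketch below works through that arithmetic in plain Python. The five-member layout (three voters in the cloud, one in each city) is an assumption for illustration, not something this plan prescribes.

```python
def can_elect_primary(reachable_voters: int, total_voters: int) -> bool:
    """Return True if a partition holds a strict majority of voting members."""
    if total_voters <= 0 or not 0 <= reachable_voters <= total_voters:
        raise ValueError("invalid voter counts")
    return reachable_voters > total_voters // 2


# Hypothetical layout: 3 voting members in the cloud, 1 in Douala, 1 in Yaoundé.
TOTAL_VOTERS = 5

# The two local members, cut off from the cloud, do not form a majority.
local_side = can_elect_primary(2, TOTAL_VOTERS)

# The cloud side still holds a majority and can keep or elect a primary.
cloud_side = can_elect_primary(3, TOTAL_VOTERS)
```

With this layout the local sites become read-only during a cloud outage. If local writes must continue, the distribution of voting members has to be chosen with that in mind.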

b. PostgreSQL Setup

- Use logical replication for PostgreSQL, allowing changes on local nodes (Douala, Yaoundé) to be replicated to the cloud node.

- Set up PostgreSQL replicas for reads on local nodes to reduce latency and improve performance.

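- In case of a network partition, local nodes keep accepting writes. The publisher retains the pending changes in its write-ahead log (through the subscription’s replication slot) and streams them to the cloud once the connection is restored. Monitor disk usage on the local nodes during long outages.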
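As a rough illustration of the logical replication setup described above, the following Python helpers build the `CREATE PUBLICATION` statement for a local node and the matching `CREATE SUBSCRIPTION` statement for the cloud node. The publication, subscription, and table names and the connection string are hypothetical placeholders. The publisher also needs `wal_level = logical` in its PostgreSQL configuration.

```python
import re
from typing import Sequence

_IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def _check_identifier(name: str) -> str:
    """Allow only plain SQL identifiers so names can be interpolated safely."""
    if not _IDENTIFIER.fullmatch(name):
        raise ValueError(f"unsafe identifier: {name!r}")
    return name


def publication_sql(name: str, tables: Sequence[str]) -> str:
    """Build the statement to run on the publisher (a local node)."""
    if not tables:
        raise ValueError("at least one table is required")
    table_list = ", ".join(_check_identifier(t) for t in tables)
    return f"CREATE PUBLICATION {_check_identifier(name)} FOR TABLE {table_list};"


def subscription_sql(name: str, conninfo: str, publication: str) -> str:
    """Build the statement to run on the subscriber (the cloud node)."""
    escaped = conninfo.replace("'", "''")  # escape quotes inside the SQL literal
    return (
        f"CREATE SUBSCRIPTION {_check_identifier(name)} "
        f"CONNECTION '{escaped}' "
        f"PUBLICATION {_check_identifier(publication)};"
    )


# Hypothetical names and address for the Douala node.
douala_pub = publication_sql("pub_douala", ["users", "orders"])
cloud_sub = subscription_sql(
    "sub_douala", "host=10.10.1.10 dbname=app user=replicator", "pub_douala"
)
```

The generated statements would be executed with whatever PostgreSQL client the deployment already uses; the helpers only assemble the SQL text.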

c. Handling Conflicts and UUIDs

- Ensure that UUIDs are generated in such a way that there is no conflict between local and cloud databases.

- You might use a time-based UUID generator (for example version 1 UUIDs, which combine a timestamp with a node identifier) to avoid conflicts in user IDs or records created across different nodes.
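One possible way to keep identifiers from colliding across sites is to give each site its own node value when generating time-based UUIDs. The sketch below uses Python's standard `uuid.uuid1`; the site names and node values are hypothetical and would need to be assigned once and kept unique per site.

```python
import uuid

# Hypothetical 48-bit node identifiers, one per site.
SITE_NODE_IDS = {
    "douala": 0x0000000000D1,
    "yaounde": 0x0000000000A1,
    "cloud": 0x0000000000C1,
}


def new_record_id(site: str) -> uuid.UUID:
    """Generate a version 1 (time-based) UUID tagged with the site's node ID."""
    return uuid.uuid1(node=SITE_NODE_IDS[site])


douala_id = new_record_id("douala")
yaounde_id = new_record_id("yaounde")
```

Because the node field differs between sites, two records created at the same instant in Douala and Yaoundé still receive different UUIDs.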

3. Service Deployment Plan

a. Local Node Services

- Deploy Django APIs (Identity Provider, User Management, etc.) and front-end applications to the local nodes (Douala and Yaoundé).

- Use Docker Compose files to define the services on each local node; in swarm mode, deploy them as stacks with `docker stack deploy`.

- Ensure that local databases and APIs are deployed and accessible, with redundancy for high availability.

b. Cloud Swarm Services

- The cloud swarm will run core services like API gateways, front-end apps, and the primary databases.

- Deploy Kong API Gateway in the cloud and have it route traffic from Douala and Yaoundé to the appropriate services.

- The cloud swarm will also host centralized logging and monitoring services.

c. Service Synchronization Between Swarms

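- Use overlay networks for service-to-service traffic inside each swarm, and link the local swarms to the cloud swarm over the IPsec tunnel, since an overlay network does not extend across separate swarms.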

- Each local swarm should be able to sync data and service states to the cloud swarm through strongSwan IPsec.

4. Networking and Security

a. IPsec (strongSwan) Configuration

- Install and configure strongSwan on all local nodes and cloud nodes to create an IPsec VPN for secure traffic.

- Set up MPLS with your provider to handle traffic routing between nodes.

- strongSwan will handle encryption, while MPLS handles the routing of traffic between sites.

b. Firewall Rules and Access Control

- Limit access to services using firewalls and ensure that only trusted IPs can communicate between local and cloud nodes.

- Use SSH keys and role-based access control (RBAC) for administrative access to servers.

- Ensure SSL/TLS encryption for all communication between APIs, databases, and end-users.
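The trusted-IP rule above can be expressed as a small allowlist check. This is only a sketch of the logic in Python using the standard `ipaddress` module; the subnets are made-up examples, and the real enforcement would live in the firewall itself.

```python
import ipaddress

# Hypothetical subnets for the Douala node, the Yaoundé node, and the cloud swarm.
TRUSTED_NETWORKS = [
    ipaddress.ip_network(cidr)
    for cidr in ("10.10.1.0/24", "10.10.2.0/24", "10.20.0.0/16")
]


def is_trusted(source_ip: str) -> bool:
    """Return True if the address belongs to one of the trusted subnets."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in TRUSTED_NETWORKS)


allowed = is_trusted("10.10.1.25")    # inside the hypothetical Douala subnet
rejected = is_trusted("203.0.113.7")  # outside every trusted subnet
```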

5. Monitoring and Logging

a. Centralized Monitoring with Prometheus & Grafana

- Set up Prometheus in the cloud swarm to monitor all nodes and services.

- Use Grafana to visualize metrics like CPU usage, disk I/O, memory consumption, and database query times.

- Configure alerting rules to notify you of issues such as high latency, service failures, or network partitions.
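Prometheus alerting rules can include a `for` duration so that an alert fires only after a condition has held for some time, which avoids paging on a single bad sample. The plain-Python sketch below illustrates that idea only; it is not Prometheus code, and the threshold and sample values are invented.

```python
from typing import Sequence


def should_fire(samples: Sequence[float], threshold: float, min_consecutive: int) -> bool:
    """Fire only if the most recent `min_consecutive` samples all exceed the threshold."""
    if min_consecutive <= 0:
        raise ValueError("min_consecutive must be positive")
    if len(samples) < min_consecutive:
        return False
    return all(value > threshold for value in samples[-min_consecutive:])


# Hypothetical API latency samples in milliseconds, oldest first.
brief_spike = should_fire([120, 900, 130, 125], threshold=500, min_consecutive=3)
sustained = should_fire([120, 650, 700, 820], threshold=500, min_consecutive=3)
```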

b. Centralized Logging with ELK Stack (Elasticsearch, Logstash, Kibana)

- Install Logstash on local nodes (Douala, Yaoundé) to collect logs from local services.

- Forward logs from Logstash to Elasticsearch running in the cloud swarm for centralized analysis.

- Use Kibana for visualizing logs and identifying errors, security issues, or traffic spikes.
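Centralized logs are easier to filter when every line is structured and carries the site it came from. As a sketch, the formatter below makes Python's standard `logging` module emit one JSON object per record. The field names and the `site` label are assumptions, and how Logstash ingests the output depends on the pipeline you configure.

```python
import json
import logging


class SiteJsonFormatter(logging.Formatter):
    """Format log records as JSON objects tagged with the originating site."""

    def __init__(self, site: str) -> None:
        super().__init__()
        self.site = site

    def format(self, record: logging.LogRecord) -> str:
        return json.dumps(
            {
                "site": self.site,
                "level": record.levelname,
                "logger": record.name,
                "message": record.getMessage(),
            }
        )


def build_logger(name: str, site: str) -> logging.Logger:
    """Create a logger that writes JSON lines to standard error."""
    handler = logging.StreamHandler()
    handler.setFormatter(SiteJsonFormatter(site))
    logger = logging.getLogger(name)
    logger.addHandler(handler)
    logger.setLevel(logging.INFO)
    return logger
```

A Django service in Douala could call `build_logger("identity_provider", "douala")` once at startup and log through the returned logger.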

6. CI/CD for Automated Deployment

a. Continuous Integration/Continuous Deployment (CI/CD) Pipeline

- Set up a GitLab CI/CD pipeline to handle automated deployments to local nodes and cloud swarm.

- Deploy front-end apps, Django APIs, and databases through the pipeline.

- Ensure that the CI/CD pipeline can detect which environment (local or cloud) the service is being deployed to and adjust configurations accordingly.

b. Environment-Specific Configuration

- Use environment variables to separate development, staging, and production environments.

- Ensure that PostgreSQL and MongoDB configurations are different for local nodes and cloud nodes to prevent data conflicts.
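One way to keep environment and node-role differences explicit is to derive all settings from a couple of environment variables. The sketch below assumes two variables, `APP_ENV` and `NODE_ROLE`, and a small table of role-specific values; all of these names are hypothetical.

```python
import os
from typing import Dict, Mapping

ENVIRONMENTS = ("development", "staging", "production")

# Hypothetical role-specific settings: local nodes publish changes, the cloud subscribes.
ROLE_SETTINGS = {
    "local": {"postgres_role": "publisher"},
    "cloud": {"postgres_role": "subscriber"},
}


def load_settings(environ: Mapping[str, str] = os.environ) -> Dict[str, object]:
    """Build a settings dictionary from APP_ENV and NODE_ROLE."""
    env = environ.get("APP_ENV", "development")
    role = environ.get("NODE_ROLE", "local")
    if env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {env!r}")
    if role not in ROLE_SETTINGS:
        raise ValueError(f"unknown node role: {role!r}")
    settings: Dict[str, object] = dict(ROLE_SETTINGS[role])
    settings.update({"environment": env, "node_role": role, "debug": env != "production"})
    return settings


# Example: what a production cloud deployment would resolve to.
cloud_prod = load_settings({"APP_ENV": "production", "NODE_ROLE": "cloud"})
```

The CI/CD pipeline would then only need to set these two variables per deployment target, and a misconfigured value fails fast instead of silently using the wrong database role.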

7. Failover and Disaster Recovery

a. Local Node Failover

- In case one of the local nodes (Douala or Yaoundé) fails, the other local node should take over as the active read/write instance.

- Ensure that Django APIs and databases on each node are configured for automatic failover.
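The takeover rule above amounts to choosing the first healthy node from an ordered preference list. A minimal sketch in Python, assuming health results are already available as booleans from some health check (the node names and the source of the health data are placeholders):

```python
from typing import Mapping, Optional, Sequence


def pick_active_node(health: Mapping[str, bool], preference: Sequence[str]) -> Optional[str]:
    """Return the first healthy node in preference order, or None if all are down."""
    for node in preference:
        if health.get(node, False):
            return node
    return None


PREFERENCE = ["douala", "yaounde"]

# Douala is down, so Yaoundé takes over as the active read/write instance.
active = pick_active_node({"douala": False, "yaounde": True}, PREFERENCE)
```

A node that is missing from the health report is treated as down, which errs on the side of not routing traffic to a node of unknown state.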

b. Cloud Node Failover

- If the cloud connection is lost, the local nodes will continue operating independently.

- Upon restoring the connection, ensure that the databases automatically synchronize all data to the cloud.

- Implement backups for both local and cloud databases, with automated recovery scripts in case of major failures.

c. Disaster Recovery Planning

- Regularly test failover scenarios by simulating the loss of a local node or cloud node to ensure your failover mechanisms work as expected.

- Implement data backups for all critical services, including front-end apps, Django APIs, and databases.

8. Testing and Validation

a. Network and VPN Testing

- Test the IPsec VPN connections to ensure all local nodes can securely communicate with the cloud swarm.

- Validate the MPLS routing to ensure that traffic is being routed efficiently and with minimal latency.

b. Service Performance Testing

- Conduct load testing to ensure that local nodes and cloud nodes can handle the expected traffic.

- Use tools like Apache JMeter to simulate real-world traffic and identify performance bottlenecks.
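Load-test results are usually judged on tail latency rather than the average. As an illustration, the helper below computes a nearest-rank percentile over response times exported from a test run; the sample values are invented.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[rank - 1]


# Hypothetical response times in milliseconds from a load-test run.
latencies_ms = [120, 80, 95, 400, 110, 105, 90, 100, 130, 85]
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
```

Here the median looks healthy while the 95th percentile exposes the single slow request, which is the kind of bottleneck the load test is meant to surface.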

c. Database Replication Testing

- Simulate network partitions to ensure that local nodes handle read/write operations properly when disconnected from the cloud.

- Test the synchronization of MongoDB and PostgreSQL data between local nodes and the cloud once connectivity is restored.
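A replication test needs an objective way to decide that the cloud has caught up. One simple approach, sketched below, is to compare record IDs and an order-independent digest of the rows exported from each side. The row shape (dictionaries with an `id` key) is an assumption, and fetching the rows from MongoDB or PostgreSQL is left out.

```python
import hashlib
import json
from typing import Any, Dict, List, Sequence

Row = Dict[str, Any]


def dataset_digest(rows: Sequence[Row]) -> str:
    """Hash rows in ID order so the digest does not depend on retrieval order."""
    digest = hashlib.sha256()
    for row in sorted(rows, key=lambda r: r["id"]):
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
    return digest.hexdigest()


def missing_ids(local_rows: Sequence[Row], cloud_rows: Sequence[Row]) -> List[Any]:
    """Return IDs present locally but not yet in the cloud."""
    return sorted({r["id"] for r in local_rows} - {r["id"] for r in cloud_rows})


# Hypothetical snapshot: one local write has not reached the cloud yet.
local = [{"id": 1, "name": "a"}, {"id": 2, "name": "b"}]
cloud_before = [{"id": 1, "name": "a"}]
cloud_after = [{"name": "b", "id": 2}, {"id": 1, "name": "a"}]

pending = missing_ids(local, cloud_before)
in_sync = dataset_digest(local) == dataset_digest(cloud_after)
```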

Final Thoughts:

This plan gives you a comprehensive roadmap for deploying and managing a distributed architecture across local nodes in Douala and Yaoundé, with a centralized cloud swarm. By following this, you’ll have a robust system capable of handling local workloads while maintaining centralized control and data integrity.